Speech Synthesizer Vst

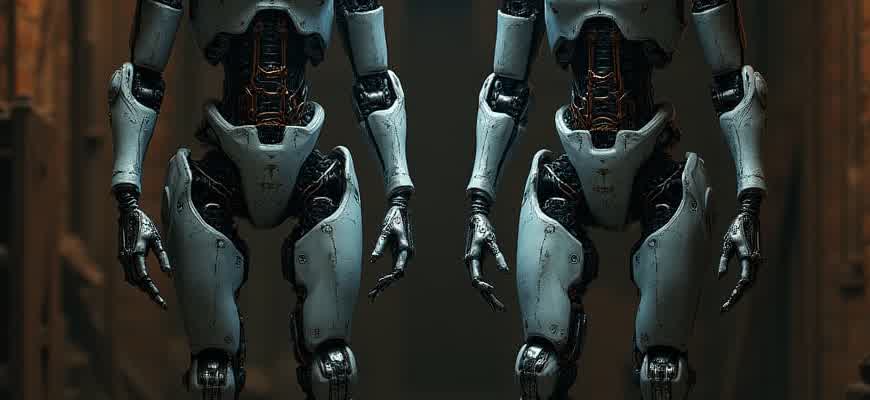

Speech synthesis technology has evolved significantly in recent years, and it now plays a key role in digital music production. Using VST (Virtual Studio Technology) plugins designed for voice creation, producers can add realistic or experimental vocal elements to their compositions without recording a human singer. These tools open up a range of possibilities, from realistic text-to-speech vocals to robotic voices that give a track a distinct character.

Types of Speech Synthesizer VSTs:

- Text-to-Speech (TTS) Plugins: Allow users to input text, which is then transformed into spoken words.

- Voicebank VSTs: Build voices from libraries of recorded voice samples, enabling more complex and natural-sounding speech synthesis.

- Formant-based Synthesizers: Create vocal sounds by shaping and modulating formants (the resonant frequency peaks of the vocal tract), which makes them ideal for experimental sounds.
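
To make formant-based synthesis more concrete, here is a minimal source-filter sketch in Python: a pulse train at the fundamental frequency is passed through a cascade of two-pole resonators, one per formant. The formant frequencies are approximate average values commonly cited for an adult male "ah" vowel; the bandwidths, function names, and normalization are assumptions for illustration, and commercial formant plugins use far more elaborate source and filter models.

```python
import math

# Approximate (frequency Hz, bandwidth Hz) pairs for an adult male "ah" vowel.
# Frequencies are textbook-style averages; bandwidths are assumed values.
AH_FORMANTS = [(730.0, 90.0), (1090.0, 110.0), (2440.0, 170.0)]


def glottal_pulses(f0, duration, sample_rate):
    """Impulse train at the fundamental frequency f0: a crude stand-in for the vocal folds."""
    n = int(duration * sample_rate)
    period = sample_rate / f0
    out = [0.0] * n
    t = 0.0
    while t < n:
        out[int(t)] = 1.0
        t += period
    return out


def resonator(signal, freq, bandwidth, sample_rate):
    """Two-pole resonant filter that emphasizes energy around one formant frequency."""
    r = math.exp(-math.pi * bandwidth / sample_rate)   # pole radius sets the bandwidth
    theta = 2.0 * math.pi * freq / sample_rate         # pole angle sets the center frequency
    a1, a2 = 2.0 * r * math.cos(theta), -r * r
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = (1.0 - r) * x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def formant_vowel(f0=120.0, duration=0.5, sample_rate=16000, formants=AH_FORMANTS):
    """Source-filter sketch: pass the pulse train through one resonator per formant in cascade."""
    signal = glottal_pulses(f0, duration, sample_rate)
    for freq, bandwidth in formants:
        signal = resonator(signal, freq, bandwidth, sample_rate)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]   # normalize to the range [-1, 1]


samples = formant_vowel()
print(len(samples), "samples of a synthetic 'ah' at 120 Hz")
```

Changing the (frequency, bandwidth) pairs moves the formants and changes the vowel; sweeping them over time is the kind of formant modulation these plugins expose as controls.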

Key Features of a Speech Synthesizer VST:

| Feature | Description |
|---|---|
| Customization | Ability to adjust the tone, pitch, speed, and expression of the voice. |
| Integration | Works inside DAWs such as Ableton Live, FL Studio, and Logic Pro (Logic loads Audio Units, so it needs the AU version of a plugin). |
| Multiple Voice Options | Support for a range of voices, from robotic to human-like. |

Using a speech synthesizer VST can transform your workflow, providing an entirely new layer of creative freedom when working with vocals or voice samples in music production.

Maximizing the Potential of Your Speech Synthesizer VST

To get the most out of your Speech Synthesizer VST, it’s essential to go beyond basic settings. The goal is to fine-tune every aspect of the plugin to fit your specific needs, whether you're working on a voiceover, creating virtual vocals for music production, or integrating text-to-speech into your project. The following tips can significantly improve your results and ensure your synthesized speech sounds as natural and clear as possible.

Here are some strategies to help you push your VST to its full potential:

Key Techniques for Optimizing Speech Synthesis

- Adjust Pitch and Speed: Tuning the pitch and speech rate can drastically change the emotional tone of the voice. Experiment with both to match the context of your project.

- Use Prosody Controls: Modify the rhythm and intonation to make the speech sound more human-like. Small changes in prosody can make a big difference in how natural the speech appears.

- Refine Vocal Character: If your synthesizer offers different vocal presets, try mixing them to create a unique voice that fits your specific tone or style.

- Work with Expression Parameters: Some VSTs provide expression controls like breathiness or emotion levels, which can help add nuance to the speech.

Additional Tips for Advanced Users

- Layer Multiple Synthesizers: Layering different speech synthesizers allows you to combine their strengths. One might handle the articulation while another provides better vocal texture.

- Integrate Effects: Adding reverb, delay, or EQ can help blend the synthetic voice more naturally into a track, especially in music production.

- Test in Context: Always check the synthesized speech in the context of your full mix. Adjustments you make in isolation might sound different when combined with other sounds.

Important Settings to Check

| Setting | Description | Recommended Adjustment |
|---|---|---|
| Pitch | Controls the fundamental frequency of the voice. A higher pitch tends to sound youthful, while a lower pitch tends to sound more authoritative. | Adjust based on the voice's intended character. |
| Speed | Adjusts the rate at which words are spoken. | Speed up for urgent speech; slow down for clearer pronunciation. |
| Volume | The overall loudness of the synthesized speech. | Match the vocal volume with the overall mix. |

Remember, each VST has unique capabilities, so take time to explore its features. Small tweaks can often yield a more natural, dynamic result.

Choosing the Right Speech Synthesis Plugin for Your Production

When selecting a speech synthesizer plugin for your project, it’s important to understand both the technical requirements and the creative goals you're trying to achieve. The right plugin can enhance the auditory experience of your composition, whether you're working on a commercial ad, film, video game, or experimental track. There are many factors to consider, from voice quality and language support to ease of integration within your DAW.

The choice of synthesizer depends on whether you prioritize natural-sounding voices, customization options, or specific language support. Features like pitch control, emotional expression, and articulation flexibility can be crucial depending on the project’s needs. In this guide, we’ll explore key aspects to consider when selecting the best tool for your workflow.

Factors to Consider

- Voice Quality: A critical element, especially for projects requiring natural-sounding speech. Look for plugins that offer advanced algorithms for speech generation.

- Customization Features: Some plugins provide deep control over tone, pitch, and emotion, making them ideal for storytelling or creative projects.

- Language Support: Ensure the plugin supports the languages and dialects you need. Certain synthesizers offer a more extensive set of languages, while others focus on specific regions.

- Compatibility: Check if the plugin integrates seamlessly with your DAW. Some plugins may have better support for specific platforms or operating systems.

Key Features

- Emotional Expression: Many synthesizers offer the ability to add emotional variation to speech, which can enhance the believability of the voice.

- Voice Selection: Look for a wide range of voices with different characteristics, so you can choose anything from a robotic voice to a human-like tone.

- Real-time Control: Look for synthesizers with MIDI or automation support, allowing you to manipulate voice elements in real time during performance or mixing.
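
As an illustration of the mappings real-time control involves, the following Python sketch converts a MIDI note number to the equal-tempered frequency a singing-style synthesizer might target, and scales a 7-bit MIDI CC value onto a parameter range. Which parameters a given plugin exposes to MIDI, and how it scales them, varies from plugin to plugin; the speech-rate range and the CC assignment here are assumptions.

```python
def midi_note_to_hz(note, a4_hz=440.0):
    """Equal-tempered frequency for a MIDI note number (note 69 is A4)."""
    return a4_hz * 2.0 ** ((note - 69) / 12.0)


def cc_to_param(cc_value, low, high):
    """Map a 7-bit MIDI CC value (0-127) linearly onto a parameter range."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values range from 0 to 127")
    return low + (high - low) * cc_value / 127.0


# Hypothetical mapping: the mod wheel (CC 1) drives a speech-rate multiplier of 0.85-1.2.
for cc in (0, 64, 127):
    print(cc, round(cc_to_param(cc, 0.85, 1.2), 3))
print("MIDI note 60 ->", round(midi_note_to_hz(60), 2), "Hz")
```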

Comparison Table

| Plugin | Voice Quality | Customization | Language Support |
|---|---|---|---|
| Plugin A | Natural | Advanced | English, Spanish, French |
| Plugin B | Robotic | Basic | English, German |
| Plugin C | Human-like | Highly customizable | Multiple Languages |

Tip: If your project requires highly expressive speech, choose a plugin with emotional tone control for more dynamic results.

Installing and Configuring a Speech Synthesizer VST in Your DAW

Integrating a speech synthesizer VST into your DAW can enhance your productions by adding vocal elements without recording a human voice. These plugins synthesize speech, typically from text input, so you can create spoken words and phrases directly inside your project. Installation and setup vary depending on the plugin and DAW you are using, but generally follow a few standard steps.

Before you begin, ensure that your DAW supports VST plugins and that you have downloaded the correct version of the speech synthesizer plugin for your system. Most plugins come with installation guides, but here are the key steps to get you started.

Steps to Install and Set Up a Speech Synthesizer VST

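- Download the Plugin: Obtain the VST plugin from the developer's website or another trusted source. Choose the build that matches your DAW and operating system: a 64-bit DAW generally needs the 64-bit version of the plugin, and you may also need to pick a format (VST2, VST3, or AU on macOS).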

- Run the Installer: Launch the installation file and follow the prompts to install the plugin. Choose a folder where you want the VST to be stored or use the default directory.

- Scan for New Plugins in Your DAW: Open your DAW and navigate to the plugin settings. In most DAWs, you will find an option to rescan or refresh your plugin folder to detect new VSTs.

- Load the VST: Confirm that the plugin now appears in the DAW's plugin list, then create a new track and add the speech synthesizer as an instrument or an effect, depending on the type of plugin.

- Configure the Plugin: Once the plugin is loaded, adjust its settings, including voice selection, speed, pitch, and any other parameters specific to the plugin.
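
On Windows, one quick way to confirm whether a plugin binary is the 32-bit or 64-bit build is to read the Machine field of its PE header. The Python sketch below does that for a `.dll` or single-file `.vst3` binary; the function names and the file path in the commented usage line are hypothetical, while the machine codes are the standard values from Microsoft's PE format documentation. A VST3 bundle is a folder, so you would point this at the binary inside it.

```python
import struct

# Machine-type codes defined in Microsoft's PE/COFF format specification.
MACHINE_TYPES = {
    0x014C: "x86 (32-bit)",
    0x8664: "x64 (64-bit)",
    0xAA64: "ARM64",
}


def pe_architecture(data: bytes) -> str:
    """Return the CPU architecture recorded in the header of a Windows PE binary."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")
    # Offset 0x3C of the DOS header stores the file offset of the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("PE signature not found")
    # The COFF header's 2-byte Machine field immediately follows the signature.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"unknown (0x{machine:04X})")


def plugin_architecture(path: str) -> str:
    """Read the start of a plugin binary (.dll or single-file .vst3) and report its architecture."""
    with open(path, "rb") as f:
        return pe_architecture(f.read(4096))


# Hypothetical path; replace it with the binary you downloaded.
# print(plugin_architecture(r"C:\Program Files\VSTPlugins\SpeechSynth.dll"))
```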

Important Considerations

Be aware that some VST plugins require additional software, such as voice libraries or external text-to-speech engines. Always refer to the plugin’s manual for any extra setup steps.

After installation, you can fine-tune the synthesizer’s voice output to suit your project’s needs. Many speech synthesizers allow you to type text directly into the plugin, while others may require external input methods like MIDI or automation.

Example of Supported DAWs and VST Integration

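| DAW | VST Integration Process |
|---|---|
| FL Studio | Scan for plugins in the Plugin Manager, then drag the VST into the Channel Rack. |
| Ableton Live | Enable your VST plug-in folders in Live's preferences, then drag the plugin from the browser's "Plug-ins" section onto a track. |
| Logic Pro X | Logic does not host VST plugins; install the AU version and load it from an instrument or effect slot. |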

Understanding the Key Features of a High-Quality Speech Synthesizer VST

When choosing a speech synthesizer plugin for your music production or voiceover projects, it is important to evaluate several aspects that directly affect the overall output. A high-quality speech synthesizer should offer natural voice generation, flexibility in tone, and advanced controls to shape the vocal performance. The accuracy of speech articulation, including how the engine handles punctuation and emotional delivery, plays a crucial role in making the final product sound realistic and engaging.

Additionally, integration with Digital Audio Workstations (DAWs) and compatibility with other virtual instruments is a key consideration. The synthesizer must be easy to use, offering both intuitive controls and deep customization options. Let's break down the main features to look for in a premium speech synthesizer plugin.

Key Features of a High-Quality Speech Synthesizer VST

- Natural Voice Quality: Look for synthesizers that use advanced algorithms and AI models to produce realistic, human-like voices. This helps ensure smooth pronunciation and accurate intonation.

- Customizable Emotions and Intonation: A good speech synthesizer should allow you to modify the emotional tone, volume, pitch, and speech rate to suit different contexts.

- Wide Range of Voices: Many VSTs offer a diverse selection of voices with different accents, genders, and ages, providing flexibility in your projects.

- Real-Time Processing: High-quality VSTs offer real-time performance with minimal latency, allowing for immediate feedback as you adjust parameters.
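
Latency is easier to judge with numbers in hand. The Python sketch below estimates the delay added by one audio buffer, plus any extra latency a plugin reports to the host, at a given sample rate. It is a simplification that assumes a single buffer: real round-trip latency also includes the input buffer, converter latency, and driver overhead, and `plugin_latency_samples` stands in for whatever figure your DAW shows for the plugin.

```python
def latency_ms(buffer_size, sample_rate, plugin_latency_samples=0):
    """Approximate latency from one audio buffer plus any latency the plugin reports."""
    return 1000.0 * (buffer_size + plugin_latency_samples) / sample_rate


for size in (64, 128, 256, 512, 1024):
    print(f"{size:>4}-sample buffer at 48 kHz: {latency_ms(size, 48000):.2f} ms")
```

Smaller buffers lower the latency you hear while tweaking parameters, at the cost of more CPU load; heavier neural voice engines may need a larger buffer to avoid dropouts.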

Advanced Control Options

- Phoneme Editing: The ability to fine-tune specific sounds (phonemes) within words is crucial for achieving precise articulation.

- Pitch and Tempo Adjustment: These controls let you alter the pitch and speed of speech, offering versatility for creative projects.

- Expressive Modifiers: Some VSTs provide sliders or automation for subtle changes in voice inflection, breathing patterns, and pauses.
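
Phoneme editors differ from plugin to plugin, but most present a sequence of phonemes with per-phoneme timing and pitch. The Python sketch below models that with a small data class; the `Phoneme` structure, its fields, and all durations and pitch offsets are hypothetical, while the phoneme spelling of "hello" follows the CMU Pronouncing Dictionary's ARPAbet entry.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Phoneme:
    symbol: str          # ARPAbet symbol; a trailing digit marks stress (1 = primary)
    duration_ms: float   # how long the sound is held
    pitch_offset: float  # semitones relative to the phrase's base pitch


# "hello" as listed in the CMU Pronouncing Dictionary: HH AH0 L OW1.
# Durations and pitch offsets are made-up illustrative values.
HELLO = [
    Phoneme("HH", 60, 0.0),
    Phoneme("AH0", 70, 0.0),
    Phoneme("L", 80, 0.5),
    Phoneme("OW1", 220, 1.0),
]


def edit_phoneme(phonemes, index, **changes):
    """Return a copy of the sequence with one phoneme's fields changed."""
    edited = list(phonemes)
    edited[index] = replace(edited[index], **changes)
    return edited


def total_duration_ms(phonemes):
    return sum(p.duration_ms for p in phonemes)


# Lengthen the stressed vowel and lift its pitch for extra emphasis.
emphasized = edit_phoneme(HELLO, 3, duration_ms=300, pitch_offset=2.0)
print(total_duration_ms(HELLO), total_duration_ms(emphasized))  # 430 510
```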

Comparison Table

| Feature | Basic VST | High-Quality VST |
|---|---|---|
| Realistic Voice Quality | Basic | Advanced (AI-driven) |
| Customization Options | Limited | Extensive (emotion, pitch, tempo) |
| Phoneme Editing | Not Available | Available |
| Real-Time Processing | Possible, but with noticeable lag | Instant, low latency |

Pro Tip: Choose a speech synthesizer that integrates seamlessly with your DAW, ensuring that you can work without any hassle and easily automate parameters for more dynamic performances.

Customizing Voice Parameters for Natural Sounding Speech Output

Fine-tuning the voice parameters in a speech synthesizer VST (Virtual Studio Technology) is crucial for achieving lifelike and convincing spoken audio. By adjusting specific settings, users can control the tone, cadence, and expression of the voice, making the output sound less mechanical and more like natural speech. These settings can vary widely across different synthesizer plugins, but many offer detailed controls that allow for precise modifications to the voice's characteristics.

To enhance the quality of speech output, it's essential to understand the most critical voice parameters and how they interact. Properly adjusting these can transform a robotic voice into one that feels human, with natural fluctuations in pitch, speed, and emphasis. Below are some of the primary settings to focus on when customizing voice output.

Key Parameters for Customization

- Pitch: Adjusting pitch helps create variation in the tone of speech, mimicking the rise and fall found in human conversation.

- Speed: The rate of speech can significantly affect how natural the output sounds. Speech that is too fast or too slow sounds unnatural.

- Volume: Varying the volume adds expressiveness, mimicking the natural rises and falls in loudness of a real conversation.

- Emphasis: Highlighting certain syllables or words gives the voice more emotional depth and variation.

- Breathiness: Adding slight breath sounds can enhance the natural feel, making the voice sound less artificial.

Parameter Adjustments for Realism

- Start by adjusting the pitch in small increments to match the desired tone. Use variations in pitch to avoid a monotone output.

- Next, tweak the speech speed to ensure it flows smoothly and maintains natural pacing.

- Focus on adding volume changes at key moments to create natural inflections and emphasize important words.

- Apply emphasis sparingly, ensuring that it sounds intentional and not overdone.

- Finally, add a subtle amount of breathiness to enhance realism, but avoid excessive use, as it may detract from clarity.
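
The steps above amount to giving the voice a pitch contour rather than a flat line. As a rough sketch, the Python function below produces one target pitch per syllable with a gradual downward drift across the phrase (a pattern often called declination in natural speech) plus a small random variation, keeping changes to a few semitones. How you apply such targets (automation, MIDI notes, or a pitch-curve editor) depends on the plugin, and every parameter name and default here is an assumption.

```python
import math
import random


def pitch_contour(num_syllables, base_hz=120.0, declination_st=-2.0,
                  jitter_st=0.5, final_rise_st=0.0, seed=None):
    """Per-syllable target pitch (Hz) with gradual declination plus small random variation."""
    rng = random.Random(seed)
    contour = []
    for i in range(num_syllables):
        position = i / max(num_syllables - 1, 1)          # 0.0 at the start, 1.0 at the end
        semitones = declination_st * position             # pitch drifts down across the phrase
        semitones += rng.uniform(-jitter_st, jitter_st)   # small variation avoids a monotone
        if i == num_syllables - 1:
            semitones += final_rise_st                    # e.g. +3 for a question-like rise
        contour.append(base_hz * 2.0 ** (semitones / 12.0))
    return contour


print([round(f, 1) for f in pitch_contour(6, seed=42)])
```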

Important Considerations

Properly balancing all these factors will significantly enhance the voice's natural quality. Too much emphasis on any one parameter, such as pitch or breathiness, can make the speech sound forced or exaggerated.

Voice Parameter Table

| Parameter | Description | Recommended Range |
|---|---|---|
| Pitch | Controls the highness or lowness of the voice. | -3 to +3 semitones |
| Speed | Adjusts the rate at which the speech is produced. | 85% to 120% of default speed |
| Volume | Sets the loudness and dynamic range of the voice. | 1 to 1.5x default |
| Emphasis | Strengthens specific words or syllables. | 0 to 5 (subtle) |
| Breathiness | Adds subtle breath sounds to the speech. | Low to moderate (0.2 to 0.5) |
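
To see what these ranges mean in absolute terms, the small Python sketch below converts them: semitones to a frequency ratio, a speed percentage to a new phrase duration, and a linear gain multiplier to decibels. It assumes the plugin's speed control scales duration inversely and that "1.5x default" volume means an amplitude multiplier; plugins may define these controls differently, and the function names are hypothetical.

```python
import math


def semitones_to_ratio(semitones):
    """Frequency multiplier for a pitch shift in semitones (12 semitones = 1 octave = 2x)."""
    return 2.0 ** (semitones / 12.0)


def speed_to_duration(seconds, speed_percent):
    """New duration of a phrase when the speech rate is set to speed_percent of default."""
    return seconds * 100.0 / speed_percent


def gain_to_db(gain):
    """Convert a linear amplitude multiplier to decibels."""
    return 20.0 * math.log10(gain)


print(f"+3 st -> x{semitones_to_ratio(3):.3f} frequency")       # x1.189
print(f"-3 st -> x{semitones_to_ratio(-3):.3f} frequency")      # x0.841
print(f"4.0 s at 85% -> {speed_to_duration(4.0, 85):.2f} s")    # 4.71 s
print(f"4.0 s at 120% -> {speed_to_duration(4.0, 120):.2f} s")  # 3.33 s
print(f"1.5x gain -> {gain_to_db(1.5):+.2f} dB")                # +3.52 dB
```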

Integrating Speech Synthesizer VST into Your Music or Audio Production Workflow

Adding a speech synthesizer plugin to your audio production setup can bring an entirely new dimension to your sound design and vocal production. With these tools, you can create spoken word samples, generate narrative elements, or even experiment with vocal textures that would be difficult to capture otherwise. A VST speech synthesizer opens up new possibilities for electronic musicians, sound designers, and game developers who want to incorporate synthesized speech seamlessly into their projects.

To effectively integrate a speech synthesizer VST into your workflow, it's crucial to understand how to make the most of its capabilities. Whether you're using it to create voiceovers, add unique vocal elements, or design sound effects, proper routing and control will be key. Here’s how to optimize the process:

Key Steps to Integrate a Speech Synthesizer VST

- Install the VST Plugin: Make sure the plugin is installed correctly and appears in your DAW's plugin list.

- Set Up Audio Routing: Route the output of the synthesizer to a separate track for ease of mixing.

- Choose the Right Voice: Select the voice and language that matches your project’s needs, adjusting pitch, speed, and tone as necessary.

- Automate Parameters: Use automation lanes to adjust parameters like pitch and modulation for more dynamic speech synthesis.
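
Automation lanes are usually drawn as breakpoints that the DAW interpolates between. The following Python sketch shows linear interpolation over a sorted list of (time, value) breakpoints, using a hypothetical pitch lane in semitones with time measured in beats; many DAWs also offer curved segments, which this sketch does not model.

```python
from bisect import bisect_right


def automation_value(breakpoints, time):
    """Linearly interpolate an automation lane defined by sorted (time, value) breakpoints."""
    times = [t for t, _ in breakpoints]
    if time <= times[0]:
        return breakpoints[0][1]
    if time >= times[-1]:
        return breakpoints[-1][1]
    i = bisect_right(times, time)          # index of the first breakpoint after `time`
    (t0, v0), (t1, v1) = breakpoints[i - 1], breakpoints[i]
    return v0 + (v1 - v0) * (time - t0) / (t1 - t0)


# Hypothetical pitch lane in semitones: flat, rise into beat 2, fall back by beat 4.
pitch_lane = [(0.0, 0.0), (1.0, 0.0), (2.0, 2.0), (4.0, -1.0)]
print([automation_value(pitch_lane, t) for t in (0.5, 1.5, 3.0, 5.0)])  # [0.0, 1.0, 0.5, -1.0]
```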

Recommended Workflow for Efficient Integration

- Start by composing the music or sound design elements in your DAW.

- Place the speech synthesizer VST on a dedicated track for vocal manipulation.

- Adjust the timing and phrasing of the speech to sync with your music or effects.

- Fine-tune the modulation of the voice to match the desired emotional tone of the track.
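
Syncing speech to the music often comes down to fitting a phrase into a whole number of beats. The Python sketch below converts beats to seconds at a given tempo and computes the speech-rate multiplier that would make a phrase fill that length, assuming the plugin's rate control scales duration inversely (time-stretching the rendered audio by the same ratio is an alternative). The phrase length, tempo, and function names are made-up example values.

```python
def beats_to_seconds(beats, bpm):
    """Length of a number of beats at a given tempo."""
    return beats * 60.0 / bpm


def speed_to_fit(phrase_seconds, beats, bpm):
    """Speech-rate multiplier that makes a phrase last exactly `beats` at `bpm`."""
    return phrase_seconds / beats_to_seconds(beats, bpm)


# A 2.4 s spoken phrase that should fill one 4/4 bar (4 beats) at 120 BPM.
target = beats_to_seconds(4, 120)   # 2.0 s
rate = speed_to_fit(2.4, 4, 120)    # 1.2, i.e. 120% of the original rate
print(target, rate)
```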

Pro Tip: Automating the synthesizer's speech rate and pitch can help the synthetic voice blend more naturally into your track.

Considerations When Using Speech Synthesizers

Speech synthesis can sometimes sound artificial or robotic if not properly managed. The key is to adjust the nuances of the voice to match the emotional tone and rhythmic flow of your production. Pay attention to:

| Aspect | Consideration |
|---|---|
| Pronunciation | Listen for mispronounced words and correct them by adjusting speech parameters or, where available, editing phonemes. |
| Timing | Sync the speech with your music to avoid unnatural breaks or pauses. |
| Vocal Effects | Apply reverb or EQ to blend the synthesized speech with the overall mix. |

Common Mistakes When Using Speech Synthesizer VSTs and How to Avoid Them

When integrating a speech synthesizer VST into your production, there are several common pitfalls that can lead to unnatural or distracting results. Many users overlook essential adjustments, which can result in robotic-sounding vocals, poor synchronization, or an unnatural blend with other audio elements. Understanding the frequent mistakes and knowing how to avoid them will help you achieve smoother and more convincing synthesized speech.

By being mindful of certain details and fine-tuning your approach, you can improve the overall quality of your output. Below are some typical errors and their solutions:

Common Pitfalls and How to Fix Them

- Neglecting Speech Timing: One of the most frequent mistakes is poor timing. The speech might not align well with the music or sound design elements, making it sound disconnected.

- Overusing Standard Voices: Using the default voice settings without modification often leads to a flat, unengaging result. It’s essential to experiment with pitch, tone, and modulation.

- Not Fine-Tuning Pronunciation: Some speech synthesizers can mispronounce certain words or phrases, making the voice sound unnatural.

- Ignoring EQ and Effects: Synthesized speech can often sound sterile if left untreated. Applying EQ and other effects, like reverb or compression, can help integrate the voice into your mix.

How to Avoid These Mistakes

- Use automation to adjust the timing of the speech, ensuring it matches the tempo and rhythm of the track.

- Customize the voice by experimenting with pitch, modulation, and speed to create a more dynamic and natural sound.

- Listen closely to the pronunciation and manually adjust settings for clearer, more accurate articulation.

- Process the speech with EQ, reverb, and other effects to make it fit better with the overall mix.
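
A high-pass filter that removes low-frequency rumble is often one of the first EQ moves on a voice before it goes into a mix. The Python sketch below implements a second-order high-pass filter using the coefficient formulas from Robert Bristow-Johnson's Audio EQ Cookbook; the 100 Hz cutoff in the demo is an assumed starting point, not a rule, and in practice you would reach for your DAW's EQ instead.

```python
import math


def highpass_biquad(samples, cutoff_hz, sample_rate, q=0.7071):
    """Second-order high-pass filter (RBJ Audio EQ Cookbook coefficients)."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b0 = (1.0 + cos_w0) / 2.0
    b1 = -(1.0 + cos_w0)
    b2 = (1.0 + cos_w0) / 2.0
    a0 = 1.0 + alpha
    a1 = -2.0 * cos_w0
    a2 = 1.0 - alpha
    # Normalize so that a0 == 1.
    b0, b1, b2, a1, a2 = b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0

    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2   # Direct Form I
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


# Demo: a constant (DC) offset is removed after the filter settles.
dc = [1.0] * 48000
print(f"DC after filtering: {highpass_biquad(dc, 100.0, 48000)[-1]:.2e}")
```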

Tip: Even small adjustments in modulation and timing can significantly enhance the believability of synthetic speech.

Key Areas to Focus On

| Problem | Solution |
|---|---|
| Robotic Sound | Modify pitch and speed to introduce more variation and human-like nuances. |
| Unnatural Timing | Use automation to sync speech with the surrounding audio tracks more effectively. |
| Disconnected Vocals | Apply effects such as reverb and EQ to better integrate the voice into the mix. |

How to Craft Distinctive Vocal Effects with a Speech Synthesizer VST

Creating unique vocal effects using a speech synthesizer VST allows for endless possibilities in sound design. Whether you're aiming for robotic voices, eerie whispers, or harmonic textures, the tools within the synthesizer and external effects can significantly enhance your production. Understanding the basic functions and how to manipulate the parameters will enable you to bring your vocal creations to life in innovative ways.

To achieve distinct vocal effects, it’s important to experiment with pitch, modulation, and processing techniques. By carefully adjusting these aspects, you can transform a simple spoken phrase into something entirely unique. Additionally, combining speech synthesis with other production tools will allow you to craft complex layers and textures that elevate the overall sound.

Key Techniques for Unique Vocal Effects

- Pitch Shifting: Altering the pitch of the voice can create dramatic effects. For instance, flattening the pitch into a monotone or snapping it hard to fixed notes can make the voice sound robotic, while extreme shifts can give it a synthetic or alien quality.

- Time Stretching: Stretching or compressing the vocal's timing can result in eerie, unnatural effects, ideal for creating suspenseful atmospheres.

- Granular Synthesis: Using granular techniques, you can break the vocal into small grains and rearrange them to produce stuttered, glitchy, or ambient effects.
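
Granular processing is easier to picture in code. This Python sketch cuts a buffer into overlapping grains, applies a Hann window to each so they fade in and out without clicks, shuffles their order, and overlap-adds them into a new buffer, which yields the stuttered, glitchy texture described above. Grain size, overlap, and the shuffling strategy are assumptions; dedicated granular plugins add controls such as grain pitch, density, and position jitter.

```python
import math
import random


def hann(n):
    """Hann window so each grain fades in and out without clicks."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def granular_shuffle(samples, grain_size=1024, overlap=0.5, seed=None):
    """Cut audio into windowed grains, shuffle their order, and overlap-add them back."""
    rng = random.Random(seed)
    hop = max(1, int(grain_size * (1.0 - overlap)))
    starts = list(range(0, max(len(samples) - grain_size, 0) + 1, hop))
    window = hann(grain_size)
    grains = [[s * w for s, w in zip(samples[st:st + grain_size], window)] for st in starts]
    rng.shuffle(grains)  # rearranging the grain order produces the stuttered texture
    out = [0.0] * (hop * (len(grains) - 1) + grain_size) if grains else []
    for k, grain in enumerate(grains):
        offset = k * hop
        for i, value in enumerate(grain):
            out[offset + i] += value
    return out


ramp = [n / 48000 for n in range(48000)]
glitched = granular_shuffle(ramp, grain_size=2048, overlap=0.5, seed=7)
print(len(glitched), "samples after granular shuffling")
```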

External Effects to Enhance Speech Synthesizer Vocals

- Reverb and Delay: These effects add space and depth to vocals. Try long reverb tails and short delays for more experimental soundscapes.

- Distortion and Saturation: Adding distortion can make the voice sound harsher or more aggressive, while saturation can add warmth and a sense of fullness.

- Chorus and Flanger: Modulation effects like chorus and flanger can introduce movement to the voice, making it sound as if it’s coming from multiple sources or creating a robotic timbre.
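
Two of these effects are simple at their core. The Python sketch below shows a feedback delay (a circular buffer whose output is fed back at a reduced level, producing decaying echoes) and tanh soft clipping as a basic form of saturation. The parameter names and default values are assumptions for illustration; dedicated delay and saturation plugins add filtering, stereo handling, and oversampling that this sketch omits.

```python
import math


def feedback_delay(samples, delay_samples, feedback=0.4, mix=0.3):
    """Simple echo: each repeat is the previous one scaled by `feedback`."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) to keep the echoes decaying")
    buffer = [0.0] * delay_samples   # circular delay line
    out = []
    pos = 0
    for x in samples:
        delayed = buffer[pos]
        buffer[pos] = x + delayed * feedback
        pos = (pos + 1) % delay_samples
        out.append((1.0 - mix) * x + mix * delayed)   # blend dry and delayed signal
    return out


def saturate(samples, drive=2.0):
    """tanh soft clipping: higher drive pushes the voice from warm toward harsh distortion."""
    norm = math.tanh(drive)   # keeps a full-scale input at full scale
    return [math.tanh(drive * s) / norm for s in samples]


impulse = [1.0] + [0.0] * 9
print(feedback_delay(impulse, delay_samples=3, feedback=0.5, mix=1.0))
```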

Effective Use of Modulation

| Modulation Type | Effect |
|---|---|
| Phaser | Creates sweeping, hypnotic effects by mixing the signal with a phase-shifted copy of itself. |
| Pitch correction (e.g., Auto-Tune) | Corrects pitch in real time; fast, hard settings give the voice a polished, robotic, or musical quality. |
| Vibrato | Introduces subtle pitch variations, making the voice sound more organic or emotional. |
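
Vibrato itself is a slow, periodic pitch modulation. The Python sketch below generates a pitch contour with a sine LFO applied in cents (hundredths of a semitone); rates of roughly 5 to 7 Hz are typical of sung vibrato, while the 30-cent depth, the control rate, and the function name are assumptions chosen for a subtle effect.

```python
import math


def vibrato_contour(base_hz, duration_s, rate_hz=5.5, depth_cents=30.0, control_rate=100):
    """Pitch values (Hz) sampled at `control_rate` per second with a sine LFO applied in cents."""
    n = int(duration_s * control_rate)
    contour = []
    for i in range(n):
        t = i / control_rate
        cents = depth_cents * math.sin(2.0 * math.pi * rate_hz * t)
        contour.append(base_hz * 2.0 ** (cents / 1200.0))
    return contour


curve = vibrato_contour(220.0, 1.0)
print(f"{min(curve):.2f} Hz to {max(curve):.2f} Hz")
```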

Tip: Layering multiple effects together, like pitch-shifting with reverb and delay, can create complex, dynamic vocal textures that stand out in any production.